1. How many "levels" of colors are needed for the animation as a whole?

2. How can we leverage the average human's visual perception to add or subtract levels of different colors?

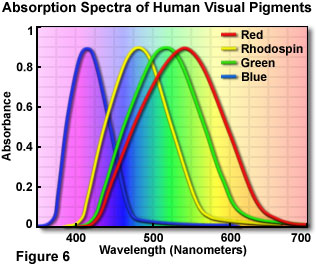

While I've delved into item #1 with only partial success, I think I'm on to something with item #2. According to this article (and others), especially the graph above, humans have a harder time seeing blue than red or green, and green seems to be the easiest color to spot. (I am going to assume this also applies to differences between RGB color levels.) Because the "blue" cone's absorbance leans toward violet (blue+red) rather than true blue, red gets an advantage, but not as much as green.

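Unfortunately, I don't have a more scientific set of ratios, but for the time being I can use a 2:1 ratio for G:R and a 2:1 ratio for R:B. The numbers after the threshold map are the red, green and blue levels, so a red level of 12 becomes `o8x8,12,24,6`; the later runs also try a gentler 1.5:1 ratio (the "tri1.5" files). Going back to plane.avi, we can compare single-level and tri-level ordered dithers, where `-append -format %k info:` reports the number of unique colors across all frames: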
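Deriving the three levels from one base value and one ratio is simple arithmetic, so it can be scripted. The sketch below is a hypothetical helper (`tri_levels` is not part of ImageMagick): it takes a red level R and a ratio k and prints `R,G,B` with G = R×k and B = R/k, rounded to the nearest integer with halves rounded up. That rounding rule is an assumption; the hand-picked values in this post do not all follow one rule.

```shell
# Hypothetical helper: derive per-channel -ordered-dither levels from a base
# red level ($1) and a perceptual ratio ($2), i.e. G = R*k and B = R/k.
# awk does the floating-point math; int(x + 0.5) rounds halves up.
tri_levels() {
  awk -v r="$1" -v k="$2" 'BEGIN { printf "%d,%d,%d\n", r, int(r * k + 0.5), int(r / k + 0.5) }'
}
```

`tri_levels 12 2` prints `12,24,6` and `tri_levels 23 1.5` prints `23,35,15`, matching specs used below. For `tri_levels 21 2` this rule gives `21,42,11`, one more blue level than the hand-picked `21,42,10`.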

```
$ convert plane.avi -ordered-dither o8x8,12,24,6 -append -format %k info:
102
$ convert plane.avi -ordered-dither o8x8,12 -append -format %k info:
73
$ convert plane.avi -ordered-dither o8x8,14 -append -format %k info:
92
$ convert plane.avi -ordered-dither o8x8,15 -append -format %k info:
109
$ convert -quiet -delay 1 plane.avi -ordered-dither o8x8,15 +map plane_od1.gif
$ convert -quiet -delay 1 plane.avi -ordered-dither o8x8,12,24,6 +map plane_od2.gif
$ cat > test.html
<img src="plane_od1.gif">
<img src="plane_od2.gif">
^D
```
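As a rough sanity check on these counts, the product of the three levels bounds how many distinct colors the dither can produce. This assumes each number is the count of values allowed for that channel and that a single number applies to all three channels. `cube_size` is a hypothetical helper written in plain shell and awk.

```shell
# Hypothetical helper: upper bound on distinct colours for a level spec such
# as "12" or "12,24,6", ASSUMING each number is the count of values per channel
# (red, green, blue) and a single number is used for all three channels.
cube_size() {
  printf '%s\n' "$1" | awk -F, '{
    if (NF == 1) print $1 * $1 * $1   # one level for every channel
    else         print $1 * $2 * $3   # separate R, G, B levels
  }'
}
```

With the 2:1/2:1 split, `cube_size 12,24,6` and `cube_size 12` both print 1728 (12 × 24 × 6 = 12³), so the tri-level spec redistributes levels among the channels rather than enlarging the color space. The measured `%k` counts (102 and 73) sit far below that bound because only colors that actually occur in the frames are counted.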

```
$ convert plane.avi -ordered-dither o8x8,23 -append -format %k info:
235
$ convert plane.avi -ordered-dither o8x8,23,46,11 -append -format %k info:
288
$ convert plane.avi -ordered-dither o8x8,23,35,15 -append -format %k info:
252
$ convert plane.avi -ordered-dither o8x8,21,42,10 -append -format %k info:
247
$ convert -quiet -delay 1 plane.avi -ordered-dither o8x8,23 +map plane_od1.gif
$ convert -quiet -delay 1 plane.avi -ordered-dither o8x8,23,35,15 +map plane_od_tri1.5.gif
$ convert -quiet -delay 1 plane.avi -ordered-dither o8x8,21,42,10 +map plane_od_tri2.0.gif
$ cat > test.html
<img src="plane_od1.gif">
<img src="plane_od_tri1.5.gif">
<img src="plane_od_tri2.0.gif">
^D
```
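Typing the comparison page into `cat > test.html` and ending it with ^D works, but the page can also be written non-interactively. `write_test_html` is a hypothetical helper, not part of ImageMagick: it writes one `<img>` tag per GIF named on the command line, each followed by `<br>` so the animations stack vertically.

```shell
# Hypothetical helper: write a side-by-side comparison page.
# Usage: write_test_html OUTPUT.html FILE.gif [FILE.gif...]
write_test_html() {
  out=$1
  shift
  : > "$out"    # create or truncate the page
  for gif in "$@"; do
    printf '<img src="%s"><br>\n' "$gif" >> "$out"
  done
}
```

For example, `write_test_html test.html plane_od1.gif plane_od_tri1.5.gif plane_od_tri2.0.gif` produces the same three images as the page above, one per line.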

```
$ convert plane.avi -ordered-dither o8x8,18,27,12 -append -format %k info:
160
$ convert plane.avi -ordered-dither o8x8,18,36,9 -append -format %k info:
188
$ convert -quiet -delay 1 plane.avi -ordered-dither o8x8,18,27,12 +map plane_od_tri1.5.gif
$ convert -quiet -delay 1 plane.avi -ordered-dither o8x8,18,36,9 +map plane_od_tri2.0.gif
```

```
$ convert plane.avi -ordered-dither o8x8,15,23,10 -append -format %k info:
128
$ convert plane.avi -ordered-dither o8x8,15,30,8 -append -format %k info:
145
$ convert -quiet -delay 1 plane.avi -ordered-dither o8x8,15,23,10 +map plane_od_tri1.5.gif
$ convert -quiet -delay 1 plane.avi -ordered-dither o8x8,15,30,8 +map plane_od_tri2.0.gif
```

```
$ convert plane.avi -ordered-dither o8x8,15 -append -format %k info:
109
$ convert plane.avi -ordered-dither o8x8,18 -append -format %k info:
149
$ convert -quiet -delay 1 plane.avi -ordered-dither o8x8,18 +map plane_od2.gif
$ convert -quiet -delay 1 plane.avi -ordered-dither o8x8,15 +map plane_od3.gif
$ cat > test.html
23: <img src="plane_od1.gif"><br>
18: <img src="plane_od2.gif"><br>
15: <img src="plane_od3.gif">
<img src="plane_od_tri1.5.gif">
<img src="plane_od_tri2.0.gif">
^D
```

So, in summary, this is a valid technique for reducing the size of the animation without reducing its quality or, if you prefer, for raising the image quality without increasing the size of the animation. However, if anybody has any other clues about item #1 or the "official" ratios, I'd like to improve upon this.
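To try other ratios without typing each command by hand, the measurements above could be scripted. The sketch below is hypothetical: `sweep` and `tri_levels` are not ImageMagick features, the script assumes an IM6-style `convert` on the PATH, and it reuses the same `o8x8` map and `%k` count as the commands above. It prints each level spec with its unique-color count and flags counts above 256, since a single GIF color table holds at most 256 entries.

```shell
# Hypothetical sweep helper; assumes ImageMagick 6 `convert` is installed.

# Same assumed rounding rule as before: G = R*k, B = R/k, halves rounded up.
tri_levels() {
  awk -v r="$1" -v k="$2" 'BEGIN { printf "%d,%d,%d\n", r, int(r * k + 0.5), int(r / k + 0.5) }'
}

# Usage: sweep SOURCE BASE_LEVEL [RATIO...]
# Prints "spec<TAB>count" for the single-level spec, then each tri-level spec.
sweep() {
  src=$1
  base=$2
  shift 2
  specs=$base
  for k in "$@"; do
    specs="$specs $(tri_levels "$base" "$k")"
  done
  for spec in $specs; do
    # Unique colours across all appended frames, as in the commands above.
    n=$(convert "$src" -ordered-dither "o8x8,$spec" -append -format %k info:)
    if [ "$n" -gt 256 ]; then
      # More colours than one GIF colour table can hold.
      printf '%s\t%s\t(over 256)\n' "$spec" "$n"
    else
      printf '%s\t%s\n' "$spec" "$n"
    fi
  done
}
```

`sweep plane.avi 15 1.5 2` runs the counts for `o8x8,15`, `o8x8,15,23,10` and `o8x8,15,30,8`, the same specs measured above, so with the same input and ImageMagick version it should reproduce those numbers.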